Evidence Of Afterlife - Virtual Reality

Evidence: If virtual reality software can store space-time within its computer memory, then the mind can store space-time within its memory too.

Abstract

This case discusses how virtual reality software, specifically Blender, can help explain the concept of the afterlife. It highlights five key elements of virtual reality that relate to the afterlife: space, awareness, time, birth, and memory. The human mind and 3D software share similarities in processing and organizing complex information, revealing how technology mirrors cognitive functions.

1. What Specific Evidence Are We Looking For?

Three-dimensional (3D) software is a specialized tool used to create and manipulate virtual objects within a digital space, with Blender being a prominent example. The human mind and 3D software share similarities in processing and organizing complex information, revealing how technology mirrors cognitive functions. Interestingly, 3D software plays a role in understanding concepts related to the afterlife, as it effectively visualizes abstract ideas like awareness, memory, and spatial perception. Blender, initially developed in 1994 by Ton Roosendaal for NeoGeo, transitioned from a proprietary tool to an open-source platform after a successful community-driven campaign in 2002. Since then, Blender has thrived under the Blender Foundation, benefiting from global contributions and fostering a dynamic community of creators. Its evolution demonstrates the power of innovation, collaboration, and accessibility in shaping the modern 3D design landscape.

2. Using Blender to Explain Afterlife's Geometric Concepts

Blender 3D software is used as a tool to illustrate the Theory of Afterlife by exploring five geometric concepts: space, awareness, time, birth, and memory. These concepts, essential to the theory, are explained through the lens of 3D modeling, where the environment, objects, and time exist within memory, just as the theory posits for human experience. Blender's 3D space parallels how physical surroundings might be stored in memory, awareness is likened to the camera's point of view, and time is shown as accessible past moments along a timeline. Birth is represented by the activation of the camera, and memory is demonstrated as a complete repository of all modeled elements. This comparison highlights a shift from conventional interpretations of life to one where the surrounding environment and events are internalized, emphasizing how 3D software helps make abstract concepts of life and afterlife more tangible and understandable.

3. Enhanced Similarities Between 3D Software and Human Reality

The human brain constructs a three-dimensional (3D) model of reality by integrating sensory inputs, a process akin to 3D modeling in software. This internal model, rather than the external environment, constitutes what we perceive as reality, shaped by neural processes in areas like the parietal lobe and hippocampus. Advances in neuroscience and phenomena like optical illusions emphasize the brain's active role in synthesizing reality, suggesting that it is bound to perception rather than existing independently. Virtual reality (VR) parallels this concept by creating immersive, interactive environments that replicate sensory and spatial experiences, offering a tangible demonstration of how the mind models reality. These similarities underscore the intricate relationship between perception, cognition, and existence, challenging traditional views of objective reality and highlighting the mind's creative modeling processes.

4. Conclusion: How 3D Modeling Provides Evidence for Afterlife

3D modeling concepts provide a unique framework for understanding existence and the afterlife, drawing parallels between virtual environments and human consciousness. In this model, physical space is limitless, awareness is a focal point (like a camera), and time is a manipulatable construct, much like a timeline in 3D software. The relationship between awareness (a single point) and memory (an infinite space) defines the awareness/memory model of existence. This extends to spacetime, where all past and future moments persist in memory. At the end of life, awareness undergoes a dimensional transformation, expanding from a single point to encompass all of space and time, akin to divine omnipresence. This theory implies that consciousness is not extinguished at death but rather merges into an infinite realm. This realm has unlimited time and space and exists inside our memory. This theory aligns with spiritual concepts of afterlife.

Evidence Confirmation

1. What Specific Evidence Are We Looking For?

1.1. What Is Three-Dimensional (3D) Software?

Three-dimensional (3D) software refers to specialized computer programs used to create, manipulate, and render three-dimensional models, animations, and simulations. These tools allow users to design virtual objects by defining their geometry, texture, lighting, and motion within a 3D space. One popular 3D package is Blender, which can be tailored to specific needs like animation, industrial design, or gaming. With advancements in technology, 3D software has also integrated capabilities for virtual reality (VR), expanding its role in immersive experiences [1].

1.2. The Similarities Between the Human Mind and 3D Software

The human mind and 3D software are remarkably similar in their underlying functions and purposes. Both are intricate systems designed to process, manipulate, and represent complex information. While the mind is an organic construct developed through millions of years of evolution, 3D software is a man-made tool refined over decades of technological advancement. Despite these different origins, their parallels offer profound insights into how humans conceptualize, create, and understand the world [2].

The similarities between the human mind and 3D software underscore the profound ways in which technology mirrors natural cognitive processes. Both systems are designed to process, organize, and represent complex information within a 3D environment. By examining these parallels, we gain a deeper understanding of human cognition, highlighting the symbiotic relationship between humanity and 3D software capabilities [3].

1.3. 3D Software and Its Role as Evidence of Afterlife

Both 3D software and Proof of Afterlife are heavily dependent on geometry. Geometry lies at the base of every object in a 3D environment. It describes the surrounding space and the objects within it. Geometry is compact in terms of the amount of memory required, yet it creates models and environments that are large in a physical sense.

The Theory of Afterlife is very much dependent upon geometry. The proof requires definition and understanding of concepts such as memory, awareness, point of view, surrounding space, and time. These concepts can be hard to describe and even harder to understand. Understanding them, however, is critical to completing the proof.

Fortunately, 3D software uses the same concepts of awareness, memory, etc. What makes 3D software important to proving afterlife is that these concepts are manifested within the software. For example, we can discuss how awareness is a point of view. We can liken it to a point within the environment. While this description is true, it does not fully impart the meaning. It is much easier, and more descriptive, to simply state that awareness is like the camera in 3D software. A definition like this is easier to visualize and understand. 3D software takes these ethereal concepts and quantifies them. Everyone knows what a camera is. Everyone knows you see the scene through the lens of the camera. When you liken the camera to awareness, it imparts a vivid, unambiguous, and accurate meaning. To teach is one thing. To show is better. 3D software shows these concepts. It does a better job of explaining them than I can. That is what we want.

3D software was born out of a need to recreate reality using a computer. It creates the environment. It creates the objects within the environment. It creates time. It does all the things necessary to recreate reality. 3D software does not, in itself, provide evidence for afterlife. We can't say that afterlife exists because 3D software exists. But what it does do is help us define our surrounding environment in a new way. It also shows that a physical 3D environment can be stored successfully in memory. 3D software is an important test case for the understanding of afterlife. We are going to take a look at a popular, open-source 3D software system called Blender. We are going to use Blender to help us define the geometric concepts of afterlife.

1.4. The Background and Development of Blender 3D Software

Blender is a powerful and versatile open-source 3D creation suite. It has gained widespread acclaim for its capabilities in modeling, animation, rendering, and more. The software's history and development reflect a unique blend of innovation, community engagement, and perseverance. Blender is a standout software tool in the world of 3D design and animation.

Background and Early History

Blender's roots can be traced back to 1994, when Ton Roosendaal, a Dutch software developer and founder of NeoGeo, one of the largest animation studios in the Netherlands at the time, began developing an in-house application for 3D content creation. This software was intended to streamline NeoGeo's animation workflow, which was previously reliant on commercial software that lacked the flexibility and efficiency required for their projects.[4]

The initial version of Blender was written as a proprietary tool, but its potential soon became evident. In 1998, Roosendaal established Not a Number (NaN), a company dedicated to marketing and developing Blender as shareware. NaN aimed to make 3D software more accessible to small studios and individual creators. Blender's early versions were appreciated for their compact size and robust feature set, but the company struggled financially.

Transition to Open Source

In 2002, NaN faced bankruptcy, and Blender's future was uncertain. However, Roosendaal proposed a groundbreaking solution: to make Blender open-source. He launched the "Free Blender" campaign to raise the amount needed to purchase the rights to Blender's source code from NaN's investors. Remarkably, the campaign succeeded in just seven weeks, thanks to overwhelming support from the community. In October 2002, Blender was officially released under the GNU General Public License (GPL), marking the beginning of a new era.[5]

Development and Growth

Blender's development flourished as an open-source 3D software program. The Blender Foundation, established by Roosendaal in 2002, coordinated its growth and community engagement. Developers from around the world contributed to the project, rapidly expanding its capabilities.

Blender's open-source nature also fostered a vibrant ecosystem of plugins and community-driven innovations. The Blender Market and other platforms emerged, allowing creators to share and sell assets, scripts, and add-ons, further enriching the software's functionality.

Impact and Community Engagement

Blender's success is deeply intertwined with its community. The Blender Foundation organizes initiatives like the Blender Development Fund, where individuals and organizations can contribute financially to support development. Blender's journey from an in-house tool to a globally recognized open-source 3D software exemplifies the power of collaboration and innovation. Its continuous development, driven by a passionate community and visionary leadership, has established Blender as a cornerstone of the 3D creation world. Today, it stands as a testament to what can be achieved through shared creativity, determination, and cooperation.[6]

2. Using Blender to Explain Afterlife's Geometric Concepts

2.1. Introduction to the Five Geometric Concepts of Afterlife

Blender 3D software is fully three-dimensional. It is used for rendering scenes. To make it truly realistic, however, it also contains time. Inside a virtual reality environment, you have unlimited length, width, and depth. You have depth in time as well. We will use Blender 3D software to aid the explanation of life's basic concepts. Blender is well-known, widely used, and open source. It also happens to be one of the best and most complete 3D modeling and rendering packages available at any price.

Blender is a 3D computer graphics software toolset used for creating animated films, visual effects, art, 3D-printed models, motion graphics, interactive 3D applications, virtual reality, and video games. Blender's features include 3D modeling, UV mapping, texturing, digital drawing, raster graphics editing, rigging and skinning, fluid and smoke simulation, particle simulation, soft body simulation, sculpting, animation, match moving, rendering, motion graphics, video editing, and compositing.

Throughout the Theory of Afterlife, we have stressed the difference between how most people view the world and how we view the world. Most people see their surrounding environment as being outside of their body. We call this worldview the "inside/outside" model of life. We at Proof of Afterlife see things differently. We see our surrounding environment as being inside memory. We call our worldview the "awareness/memory" model of life. In the sections that follow, we are going to explain what the world sees as true, versus what the theory feels is true with supporting evidence from 3D software.

Proof of Afterlife was not discovered using 3D software; however, the similarities between it and life are striking. There is one word of caution, though. Afterlife will not be like virtual reality. Afterlife is an expansion of awareness that occurs at the end of life. Virtual reality, on the other hand, does not do that. Virtual reality is an environment containing a camera that emulates life, not afterlife. Virtual reality does not duplicate, approach, or define afterlife in any way. However, it does model life quite well. Therefore, it is a useful model to explain the main concepts of life that we can extend to show how afterlife works. These concepts are: Space, Awareness, Time, Birth, and Memory.

These concepts are the building blocks of the Theory of Afterlife, so we will go through them next.

2.2. Geometric Concept One: Physical Space

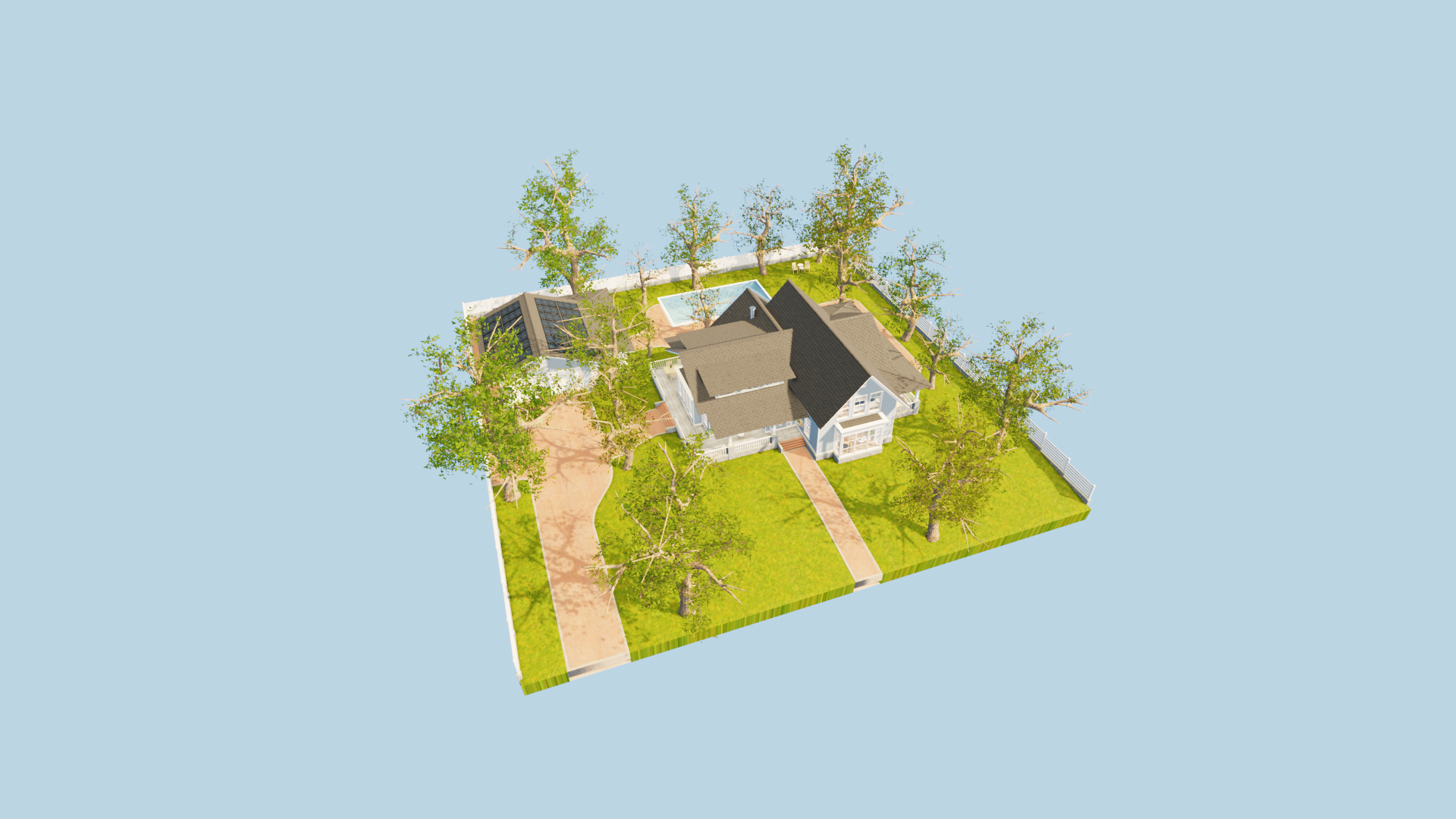

I purchased this model to demonstrate how three-dimensional space can be loaded into memory. I selected this model because it feels like you could be standing there, in the front yard. This is a view of the house, garden, and pool from high above.

Here is what the author has to say about the model: This model contains a complete furnished house with a garden. Everything is textured and the lighting is completely baked for fast renders. This model can be used for commercial purposes and works with real-time game engines. This is a classic style porch house with furnished interior for Blender and set in today's era. It is inspired by the East Coast USA classic house design and an original twist. This is an originally designed and modeled house and doesn't exist in the real world.[7]

Ironically, the author refers to the real world to differentiate it from the virtual world. The purpose of this section is to show how the real world could conceivably be a model held in memory. A major assertion of Proof of Afterlife is that the mind extends out, beyond the body, to the limit of the environment. The idea is to mentally conceive of the real world as a model like this within memory. This scene uses just 833 megabytes of memory. It is possible that a three-dimensional model like this one could exist within the mind's memory. It is within memory where the environment is realized. The real-world model would be better and more complete, but you can see by looking at this that such a model within memory could be possible.

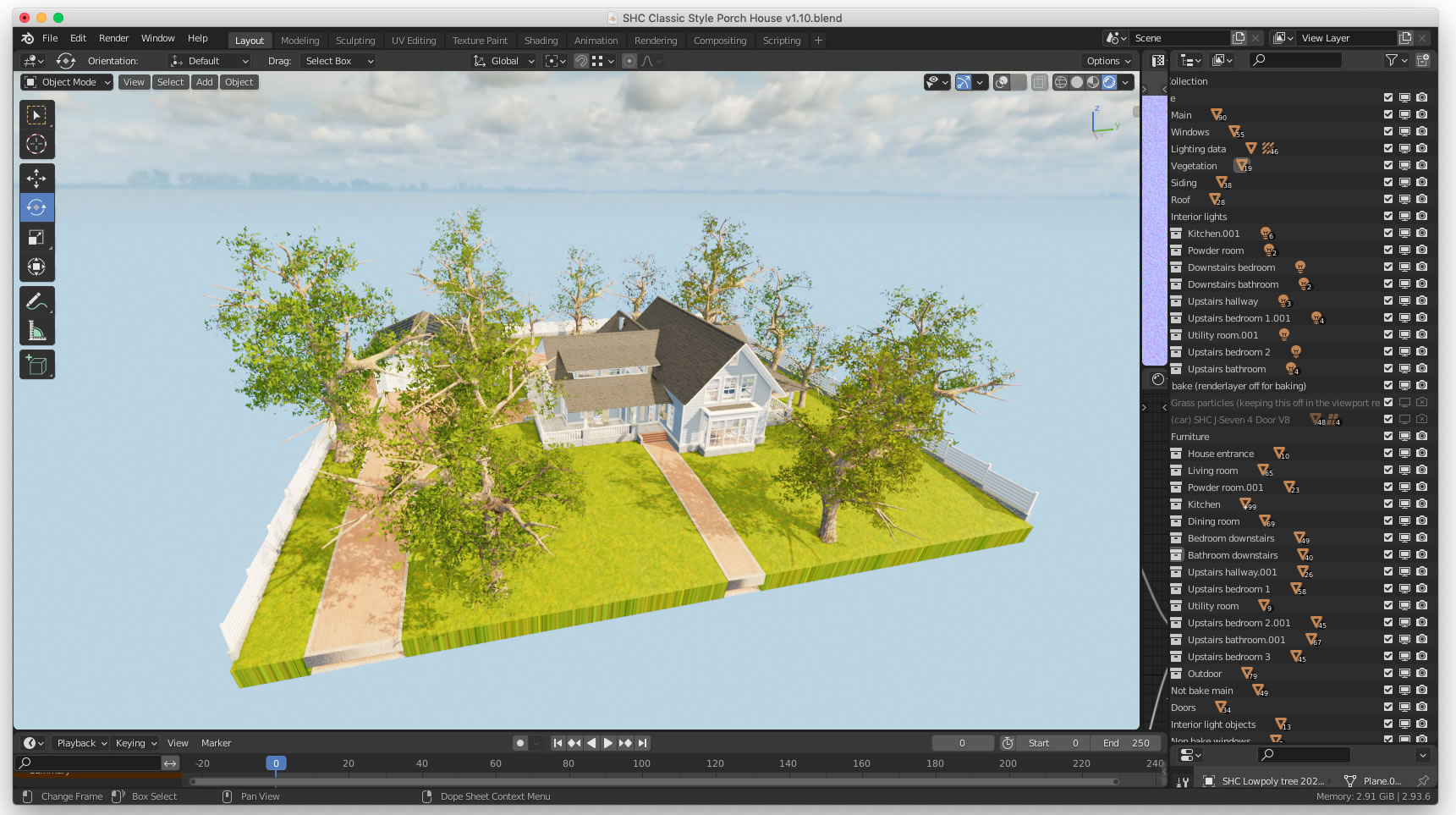

Here is the same model, only surrounded by the control panels of Blender software. Blender software contains all the tools needed to construct the model. In the human mind, reality is modeled on the fly as it presents itself. This model contains 1639 objects, 2.25 million edges, and 1.1 million faces. All this is contained in less than 1 gigabyte of memory. I do believe the mind uses geometry, or some derivation of it, to convert space to memory. These memory requirements are nothing the human mind couldn't handle. Here is how the two models of life differ in their interpretation of physical space:

The Conventional Interpretation of Physical Space:

Until now, most people regard the world around them as the external world. They regard their thoughts and emotions as being inside their mind. They regard their physical surroundings as being outside their mind. Thus, they see physical space as outside of their body and mind.

The 3D Modeling Interpretation of Physical Space:

3D software regards physical space as being inside computer memory. In 3D software, the surrounding space is a computer model. It was put together in a process called modeling. This 3D model, of the outside world, exists inside the memory of a computer. Since the outside world resides inside memory, and memory exists inside the mind, then the outside world is also inside the mind. With the awareness/memory model, there is no outside. Everything - consciousness, thoughts, and the physical world - exists inside memory.
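
The claim that geometry is compact can be checked with rough arithmetic on the edge and face counts quoted earlier in this section. The sketch below is a back-of-envelope estimate only, not a measurement of the actual file. The vertex count is not given in the text, so it is an assumption derived from Euler's formula for a closed mesh (V = E - F + 2, rounded); the byte sizes and the choice to treat every face as a quad are also assumptions.

```python
# Back-of-envelope estimate of raw geometry storage for the house model.
# Edge and face counts and the 833 MB scene size come from the text.
# Everything marked "assumed" is a hypothetical value for illustration.

EDGES = 2_250_000
FACES = 1_100_000
SCENE_MEGABYTES = 833

ASSUMED_VERTICES = 1_150_000      # assumed: roughly E - F from Euler's formula
ASSUMED_BYTES_PER_FLOAT = 4       # assumed: 32-bit coordinates
ASSUMED_BYTES_PER_INDEX = 4       # assumed: 32-bit vertex indices
ASSUMED_INDICES_PER_FACE = 4      # assumed: every face is a quad


def geometry_bytes(vertices: int, edges: int, faces: int) -> int:
    """Return the bytes needed for bare coordinates and connectivity."""
    vertex_bytes = vertices * 3 * ASSUMED_BYTES_PER_FLOAT   # x, y, z per vertex
    edge_bytes = edges * 2 * ASSUMED_BYTES_PER_INDEX        # two endpoints per edge
    face_bytes = faces * ASSUMED_INDICES_PER_FACE * ASSUMED_BYTES_PER_INDEX
    return vertex_bytes + edge_bytes + face_bytes


total_bytes = geometry_bytes(ASSUMED_VERTICES, EDGES, FACES)
total_megabytes = total_bytes / 1_000_000
share_of_scene = total_megabytes / SCENE_MEGABYTES

print(f"Raw geometry: about {total_megabytes:.1f} MB")
print(f"Share of the 833 MB scene: about {share_of_scene:.0%}")
```

Under these assumptions the bare geometry comes to roughly 50 MB, a small fraction of the scene's total. The remainder would presumably be textures, baked lighting, and other data, which the text does not break down.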

2.3. Geometric Concept Two: Awareness

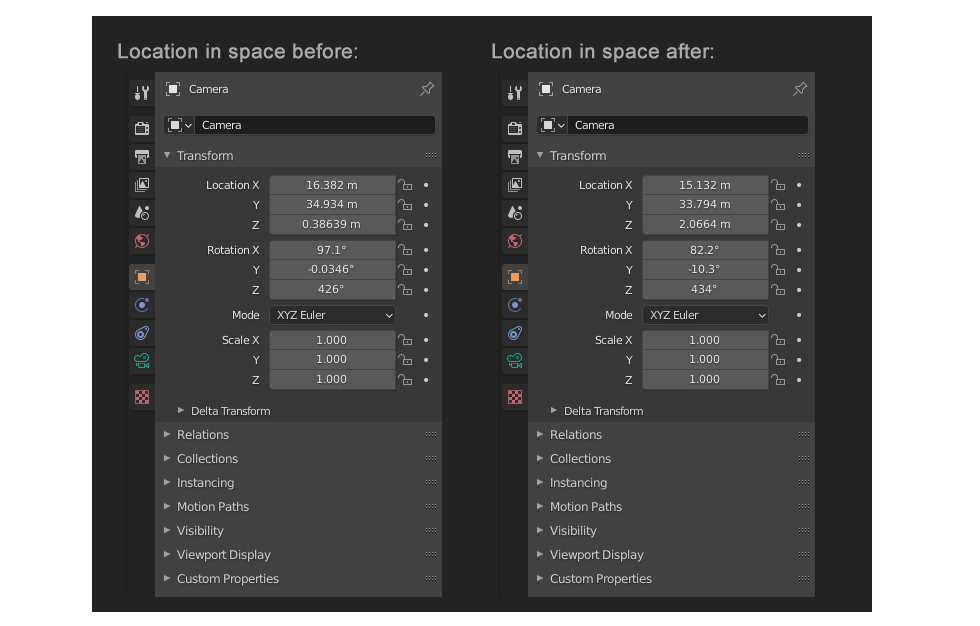

In virtual reality software, conscious awareness is represented by the active camera within the scene. This is the point of view that we view the model from. In the image below, we have placed a camera into the scene, looking at the front door. The camera coordinates are shown below.

Notice the geometric dimensions and settings of the active camera within the scene in the image below. The camera is located at a geometric point. The camera's settings are shown in the "Location in space before" panel shown below left. The camera has a specific location: 16.382 in X, 34.934 in Y, and 0.38539 in Z. The camera is located at a single point within the model. It is likened to our point of view during life.

Now we walk through the front door, turn left inside the house, and move into the living room. We turn our heads and look out through the picture window as shown below. The camera has moved. It now has new coordinates as shown above right, in the "Location in space after" area of the image. Specifically, the camera is now located at 15.132 in X, 33.794 in Y, and 2.0664 in Z. Here is what we see from this new point of view:

The displacement from the point before to the point after illustrates how awareness moves in time and space. This is how the two models of life differ in their interpretation of awareness:

The Conventional Interpretation of Awareness:

Most people do not pay much attention to the physical location of their point-of-view during life. We pay little heed to where awareness is within space or where it is within time. Nor do we give much thought to the physical size of awareness. Admittedly, there is little reason to contemplate this during life. We tend to see awareness as a fuzzy ball, somewhat focused, that "pays attention" to some things and ignores other things. Typically, awareness is not well defined in this way.

The 3D Modeling Interpretation of Awareness:

3D software is much more precise in its representation of awareness. Awareness, in 3D software, is the camera. The camera is live, meaning it is active and perceives the environment. Likening human awareness to the camera allows us to be more focused and precise in our interpretation. First, awareness is located at a specific point within the environment. It has a specific X, Y, and Z coordinate location. Second, it has a specific location within time. Third, in terms of its size, awareness is a single point. As you can see in the "Location in Space" panel above, awareness is located at a point within the environment. In the awareness/memory model, awareness is defined as a single point of view within the environment.
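
The two camera locations quoted earlier in this section make the idea of awareness as a moving point concrete. The sketch below computes the displacement between the "before" and "after" coordinates in plain Python. The `Point` class and helper functions are hypothetical names introduced for illustration. Inside Blender, the same values could presumably be read from the active camera through the Python API (for example `bpy.context.scene.camera.location`), but that is not done here so the sketch runs anywhere.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A single location in the scene (hypothetical helper type)."""
    x: float
    y: float
    z: float


def displacement(start: Point, end: Point) -> tuple:
    """Return the per-axis change from start to end."""
    return (end.x - start.x, end.y - start.y, end.z - start.z)


def distance(start: Point, end: Point) -> float:
    """Return the straight-line distance between two points."""
    return math.sqrt(sum(d * d for d in displacement(start, end)))


# Coordinates taken from the "before" and "after" panels described in the text.
before = Point(16.382, 34.934, 0.38539)
after = Point(15.132, 33.794, 2.0664)

dx, dy, dz = displacement(before, after)
moved = distance(before, after)

print(f"Change in X, Y, Z: {dx:.3f}, {dy:.3f}, {dz:.5f}")
print(f"Straight-line distance moved: {moved:.2f} scene units")
```

At each moment the camera occupies exactly one point. The walk from the front door to the living room window is fully described by two points and the vector between them, roughly 2.4 scene units in this case.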

2.4. Geometric Concept Three: Time

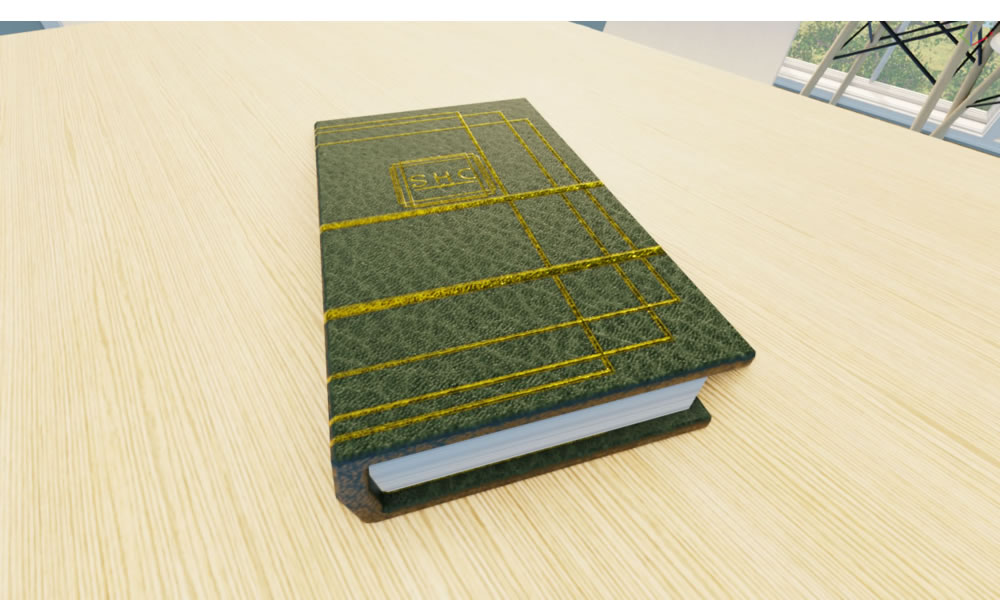

Now that we are in the house, we look at the clock on the wall. Oh, it's exactly 4:00 PM. We make a note of that. We also make a note of the book on the table. We are going to look at that next. We take a step toward the book. Then we look down at it. Then we note the time.

That moment, the present, is shown below, top. We are located at time zero within the virtual reality model as shown by the blue marker in the "Location in Time Before" section on the timeline below.

Then we take a few seconds to move in and take a closer look at the book. Time has moved a few seconds. That is shown by the blue marker in the "Location in Time After" section on the timeline above, bottom. The time marker was at zero. It is now at 3. We have moved in time.

3D software demonstrates how time works. It isn't that we have made a copy of the environment at time zero. We haven't moved it or modified it. The time-zero environment still exists in memory. It was the present. Now it's the past. Nothing has changed with the time-zero moment. To access it or revisit it, all we need to do is move the time marker back to zero. That is how virtual reality software works. The moments of the past still exist. They aren't copies of moments. They are the original moments, intact, unmoved, and unaltered, in full detail. Storing an environment is a property of memory.

The Conventional Interpretation of Time:

We live life from the perspective of being in the present moment. Moments of the past are regarded as being fuzzy, incomplete copies of present moments. We tend to define moments of the past based on what we can remember about them. For example, we may be able to remember what we had for lunch two days ago, or we may not. If we can't remember, we regard the moment as having been dropped from memory.

The 3D Modeling Interpretation of Time:

3D software shows us that past moments are not lost. Nor are they dropped from memory. Past moments are simply located at different points along the timeline. Should we move the timeline back, we will be physically inside that moment. This isn't a copy of the moment. It is the original moment, intact. The only thing that has changed is the timeline pointer has moved.
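
The idea that moving the time marker returns to the original moment, rather than a copy, can be sketched with a toy timeline. This is an illustration of the concept as described in this section, not a description of how Blender stores animation internally. The `Moment` and `Timeline` classes are hypothetical names. In Blender itself the marker would presumably be moved with a call such as `bpy.context.scene.frame_set(0)`.

```python
from dataclasses import dataclass, field


@dataclass
class Moment:
    """One stored moment on the timeline (hypothetical helper type)."""
    frame: int
    description: str


@dataclass
class Timeline:
    """A toy timeline: stored moments plus a marker pointing at one of them."""
    moments: list = field(default_factory=list)
    marker: int = 0

    def record(self, description: str) -> Moment:
        """Store a new moment at the next frame and advance the marker to it."""
        moment = Moment(frame=len(self.moments), description=description)
        self.moments.append(moment)
        self.marker = moment.frame
        return moment

    def move_marker(self, frame: int) -> Moment:
        """Move the marker to a stored frame and return that stored moment."""
        if not 0 <= frame < len(self.moments):
            raise IndexError(f"no moment stored at frame {frame}")
        self.marker = frame
        return self.moments[frame]


timeline = Timeline()
first = timeline.record("looking at the clock, 4:00 PM")   # frame 0
timeline.record("stepping toward the book")                # frame 1
timeline.record("leaning in")                              # frame 2
timeline.record("looking down at the book")                # frame 3
marker_after_walk = timeline.marker

# Move the marker back to zero: the same object comes back, not a copy.
revisited = timeline.move_marker(0)
print(revisited is first)
```

The identity check `revisited is first` holds because only the marker moved. Nothing in the list of stored moments was copied, altered, or removed.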

2.5. Geometric Concept Four: Birth

The Conventional Interpretation of Birth:

We tend to see birth as a process that happens over time. We define this as beginning with a cell that grows and develops over time. Typically, there is no defined notion of exactly where and when life starts. We simply do not know where this is.

The 3D Modeling Interpretation of Birth:

In 3D software, birth is when the camera turns on. In 3D modeling, birth (the camera) has a specific, single location in space. It is located at that point. There is also a specific moment in time when the camera goes from off to on. At that point, in both time and space, the camera begins to perceive its surrounding environment. That specific moment in time and space is defined as birth in this context.
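
The definition of birth as a single point in space and time can be sketched as a camera that records where and when it first switches on. The `Camera` class and its fields are hypothetical names for illustration, and the starting coordinates are simply reused from the awareness example. The text does not say where or when the camera in the house model was first activated.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Location = Tuple[float, float, float]


@dataclass
class Camera:
    """A toy camera that remembers the point at which it first turned on."""
    location: Location
    active: bool = False
    birth: Optional[Tuple[Location, int]] = None  # (location, frame) at first activation

    def turn_on(self, frame: int) -> Tuple[Location, int]:
        """Switch the camera on; the first activation is recorded as birth."""
        if not self.active:
            self.active = True
            self.birth = (self.location, frame)
        return self.birth


# Illustrative values only: location reused from the awareness section.
camera = Camera(location=(16.382, 34.934, 0.38539))
birth = camera.turn_on(frame=0)

# Later movement and later calls do not change the recorded birth point.
camera.location = (15.132, 33.794, 2.0664)
still_birth = camera.turn_on(frame=3)
print(birth == still_birth)
```

In this sketch birth is one pair of values, a location and a frame, fixed at the off-to-on transition. Everything the camera does afterwards leaves that pair unchanged.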

2.6. Geometric Concept Five: Memory

The Conventional Interpretation of Memory:

Memory, in a conventional sense, is loosely related to remembering. We tend to view memory as what we can remember. For example, someone who has a "good memory" can recall more events from the past than someone who does not have a good memory. We tend to believe that the person with a good memory has a larger and more robust memory. A person who does not have a good memory is seen as having events that drop out or become lost. Their memory is regarded as smaller and less robust.

The 3D Modeling Interpretation of Memory:

3D software shows us there is no such thing as a bad memory. 3D models are created and stored in memory. 3D renderings are created from these models. All the work and data that go into creating a 3D environment stay in the computer's memory. Memory, in terms of 3D software, is regarded as what is in memory. For example, when you create a 3D model, you set up a folder. Everything that goes into that model goes into that folder. The contents of the folder, and what goes into the scene, are in memory.

3. Enhanced Similarities Between 3D Software and Human Reality

3.1. Does 3D Modeling Take Place in the Brain?

The human brain is a marvel of evolution, capable of processing vast amounts of information to create coherent perceptions of the world. Among its many abilities, the brain constructs a three-dimensional (3D) model of our environment to guide interaction and navigation. The conventional view of the environment is that it exists outside the mind. Under this view, we tend to believe that we interact directly with the outside environment.

A more accurate way to interpret this is to imagine that the brain takes in sensory stimuli and assembles a 3D model of the outside world inside the mind. Rather than interacting directly with the outside world, we interact with our internal model. Thus, perceiving reality becomes a three-step process. First, we take in stimuli from the outside world. Second, we assemble that data into a model of the world using modeling techniques. Third, we interact with our internal model. It is the perception of our internal model that provides reality, not the outside world.
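
The three-step process described above can be sketched as a small pipeline. This is a deliberately simplified illustration of the argument, not a model of neural processing. The function names and the dictionary representation of the world are assumptions made for the example.

```python
def sense(world: dict) -> list:
    """Step 1: take in stimuli, here a list of (name, position) observations."""
    return list(world.items())


def assemble(stimuli: list) -> dict:
    """Step 2: assemble the stimuli into an internal model."""
    return {name: position for name, position in stimuli}


def locate(model: dict, name: str):
    """Step 3: interact with the internal model only, never the world itself."""
    return model.get(name)


# A hypothetical outside world with two objects and their positions.
world = {"clock": (2.0, 0.0, 1.8), "book": (1.0, 1.5, 0.8)}

model = assemble(sense(world))
seen_before = locate(model, "book")

# The outside world changes, but the internal model has not been rebuilt yet.
world["book"] = (3.0, 1.5, 0.8)
seen_stale = locate(model, "book")

# Only after sensing and assembling again does the perceived position change.
model = assemble(sense(world))
seen_after = locate(model, "book")

print(seen_before, seen_stale, seen_after)
```

The stale reading in the middle is the point of the sketch. What is perceived is whatever the internal model holds, and it tracks the outside world only as far as new stimuli have been assembled into it.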

The Mechanisms of 3D Modeling in the Brain

The brain creates a 3D model by integrating sensory information, primarily from vision, touch, and proprioception. The visual system plays a central role, as it interprets depth, distance, and spatial relationships through cues like stereopsis (binocular disparity), motion parallax, and perspective. The primary visual cortex (V1) processes basic visual features, while higher-order areas like the parietal lobe synthesize these into spatial representations.[8]

The parietal lobe, particularly the posterior parietal cortex, is essential for spatial awareness. It integrates sensory inputs to form a cohesive map of the external environment, enabling functions like object recognition and navigation.[9] Neuroimaging studies have demonstrated that specific regions, such as the lateral intraparietal area, are involved in encoding depth and spatial relationships.[10]

Moreover, the hippocampus contributes to 3D modeling by creating cognitive maps - mental representations of spatial layouts. Research on place cells and grid cells reveals how the hippocampal formation encodes an organism's position and orientation within an environment.[11] This encoding allows individuals to navigate complex spaces and remember spatial arrangements.

Evidence from Neuropsychology and Neuroscience

Studies of brain lesions provide insights into how 3D modeling occurs. Damage to the parietal lobe can result in spatial neglect, where individuals lose awareness of one side of their environment.[12] This phenomenon underscores the parietal lobe's role in constructing spatial models.

Neuroimaging techniques, such as functional magnetic resonance imaging (fMRI) and positron emission tomography (PET), have revealed the activation of specific brain areas during tasks requiring spatial reasoning or depth perception.[13] For instance, the dorsal visual stream, often called the "where pathway," processes spatial location and movement, while the ventral stream, or "what pathway," identifies object characteristics.[14]

Computational Modeling and Artificial Intelligence

Advancements in artificial intelligence (AI) offer analogies to how the brain models 3D space. AI systems for computer vision use algorithms inspired by neural processes to interpret depth and spatial relationships. Convolutional neural networks (CNNs), for example, mimic hierarchical visual processing in the brain, providing insights into how biological systems might construct 3D models.[15]

Conclusion

3D modeling in the brain is a dynamic process involving multiple regions and systems. By integrating sensory inputs and encoding spatial relationships, the brain constructs a 3D model of surrounding space, similar to the modeling process in 3D software. We perceive, feel, and interact with the model, not the surrounding physical space. Advances in neuroscience and AI continue to uncover the complexities of this remarkable capability, with profound implications for the theory of afterlife.

3.2. Is Reality Already Within the Mind the Moment We Realize It?

The question of whether reality exists independently of the mind or is fundamentally created by it has long intrigued philosophers, scientists, and thinkers. From the cognitive sciences of today, a common thread emerges: reality as we know it is inseparably bound to perception. This section explores the notion that reality is already inside the mind the moment we experience it.

Neuroscience and Perception

Modern neuroscience reinforces this view by illustrating how the brain constructs reality. Sensory input, while crucial, is not sufficient for the full experience of reality. The brain integrates incoming stimuli with prior knowledge, expectations, and contextual information to generate what we perceive as the external world.[16] For instance, optical illusions demonstrate how the brain can "misinterpret" sensory data, creating a version of reality that deviates from external stimuli.

Moreover, studies of phenomena such as blindsight and hallucinations suggest that perception is not merely a reflection of external reality but a synthesis generated by neural processes. This aligns with the idea that what we experience as reality is an internal 3D model, shaped by both external inputs and internal frameworks.[17]

Psychological Insights

Psychology further underscores the role of the mind in creating reality. Cognitive biases, for example, influence how we interpret information, leading to subjective realities that may diverge significantly from the outside world. Confirmation bias, where individuals favor information that aligns with their preexisting beliefs, illustrates how the mind's expectations create perception.[18]

Constructivist theories of learning, such as those proposed by Jean Piaget, also highlight how individuals actively build their understanding of the world. This supports the notion that reality, as experienced, is a construct rather than an absolute entity.[19]

Conclusion

The idea that reality is already inside the mind the moment we experience it carries profound implications. It challenges the assumption of an objective, observer-independent reality and suggests that what we consider "reality" is an internal model. By combining insights from neuroscience and psychology, we can appreciate that reality as experienced is not a straightforward reflection of the external world but a rich, dynamic model constructed inside the mind. This does not negate the existence of an external reality but underscores the intricate interplay between perception and existence. Reality, as it appears to us, comes from the mind's creative modeling processes. Therefore, outside space is not perceived directly from external stimuli. It is perceived through cognition of an internal model.

3.3. Perceiving "Reality" Through a Virtual Reality Headset

Up until this point in history, virtual reality has been presented in two dimensions, usually on a computer or movie screen. This is because the model is rendered as a scene through the viewpoint of the camera. This two-dimensional, flat presentation of the model understates what virtual reality can do. Now, with the advent of virtual reality headsets, that all changes.

When you put on a headset, you get a glimpse of what virtual reality can do. With a VR headset, you become immersed in the model. Rather than seeing a framed rendering from the camera view, you are actually in the model - seeing the model from within. When you experience virtual reality through a headset, you are getting very close to seeing how life works in the grand scheme of things.

Virtual reality headsets bring "the mind as space" concept to life. When working with virtual reality software, you have a camera surrounded by the environment. In life, you have essentially the same thing. You have conscious awareness surrounded by space. Virtual reality experienced through a headset is a demonstration of the mind as space. It provides evidence of the awareness/memory model that we experience during life. With a headset, we can demonstrate how perceptions of the outside world work firsthand.

3.4. The Similarities Between Virtual Reality and Human Reality

While virtual reality is a construct of advanced technology and human reality is the fundamental existence of life, the two share remarkable similarities in structure, experience, and interaction.

Sensory Experiences

A striking similarity between VR and human reality lies in their reliance on sensory perception. Human reality is perceived through the five senses: sight, sound, touch, taste, and smell. In VR, senses are replicated through hardware and software technologies, most fully in the case of sight and sound. Visual elements in VR are created using high-definition graphics and head-mounted displays, while spatial audio systems provide directional sound.[20]

Spatial and Temporal Dynamics

Both VR and human reality operate within spatial and temporal frameworks. In human reality, space and time are constants that govern existence. Similarly, VR environments are constructed with virtual spaces that mimic real-world physics, such as gravity, motion, and spatial dimensions. Time in VR can be manipulated to run parallel to or independent of human reality, offering unique experiences such as slow-motion or time-lapse effects.[21]

These dynamics allow VR to mimic the rules of human reality while also offering the flexibility to transcend them, providing users with both familiar and novel experiences.[22]
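The manipulation of time described above can be sketched in a few lines. This is a minimal illustration, not how any particular VR engine works: the names `advance`, `run`, and `time_scale` are hypothetical. Each step of real time is multiplied by a scale factor before it is added to the simulated clock, so a factor below 1 gives slow motion and a factor above 1 gives a time-lapse.

```python
def advance(sim_time, real_dt, time_scale):
    """Advance the simulated clock by one real-time step, scaled by time_scale."""
    return sim_time + real_dt * time_scale


def run(steps, real_dt, time_scale):
    """Run a fixed number of steps and return the simulated time that elapsed."""
    sim_time = 0.0
    for _ in range(steps):
        sim_time = advance(sim_time, real_dt, time_scale)
    return sim_time


# Two seconds of real time, taken as eight steps of 0.25 seconds each.
parallel = run(8, 0.25, 1.0)     # runs in step with real time
slow_motion = run(8, 0.25, 0.5)  # half speed
time_lapse = run(8, 0.25, 4.0)   # four times speed
print(parallel, slow_motion, time_lapse)
```

The same two seconds of real time yield different amounts of virtual time, which is the sense in which time in VR can run parallel to or independent of human reality.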

Interaction and Agency

Interaction is central to both VR and human reality. In human life, agency is exercised through decision-making, movement, and communication. VR environments replicate this through interactive elements that respond to user input. Controllers, motion sensors, and voice recognition systems allow users to navigate and influence virtual spaces in ways analogous to their actions in the real world.[23]

Moreover, VR enables social interaction in shared virtual spaces, paralleling human interactions in physical environments. Multiplayer VR platforms allow individuals to collaborate, communicate, and build relationships, creating communities that mirror those in human reality.[24]

Conclusion

Virtual reality and human reality share a remarkable set of parallels that encompass sensory perception, spatial dynamics, interaction, and emotional engagement. These similarities not only enhance VR's immersion and relevance but also provoke deeper reflections on the nature of existence itself. As VR technology continues to advance, the virtual and the real may become increasingly indistinguishable. Virtual reality provides a demonstration proving that human reality is the perception of a 3D model constructed within the mind that physically resides inside memory.

4. Conclusion: How 3D Modeling Provides Evidence for Afterlife

4.1. 3D Modeling Basic Concepts of Afterlife

Blender's 3D modeling software gives us the opportunity to define the basic concepts required to prove afterlife. The geometric concepts are fully defined in Section 2 above. Here is a summary of the concepts and our new 3D modeling definition of each:

1. Physical Space

Physical space, in terms of 3D modeling, is defined as a computer model that resides physically in memory. When the model is read into Blender software, it produces an entire three-dimensional space. It is worth noting that this space is unlimited because it is defined using geometry, that is, by coordinates rather than by a fixed grid. There are no limits on its physical size. In summary, physical space is a computer model of unlimited length, width, and depth.

2. Awareness

Awareness, in terms of 3D modeling, is defined as the camera. The camera has an associated panel that holds its X, Y, and Z locations within the space. We call the camera awareness when it is active and perceiving the surrounding reality.

3. Time

Time, in terms of 3D modeling, is the timeline within Blender's interface. Time is set by a slider that can be positioned along a timeline.

4. Birth

Birth, in terms of 3D modeling, is the moment the camera turns on. The beginning of life can be defined as the camera turning on within Blender software.

5. Memory

Memory, in terms of 3D software, is the memory of the computer you are working on. Blender software holds the 3D model within memory. All data used in the creation of a virtual reality environment resides in the memory of the computer.
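The five definitions above can be gathered into one small data structure. The sketch below is plain Python rather than Blender's own API, and every name in it (`Camera`, `Scene`, `turn_on_camera`, `set_frame`, the sample "house" vertices) is a hypothetical stand-in chosen for illustration. It is meant only to show how space, awareness, time, birth, and memory could sit together in one in-memory model.

```python
from dataclasses import dataclass, field


@dataclass
class Camera:
    """Awareness: a single point of view with an X, Y, Z location."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    active: bool = False  # Birth: the moment this flips to True


@dataclass
class Scene:
    """Memory: one in-memory container holding everything in the model."""
    # Physical space: named objects, each a list of (x, y, z) vertices.
    objects: dict = field(default_factory=dict)
    camera: Camera = field(default_factory=Camera)
    current_frame: int = 1  # Time: the position of the timeline slider

    def turn_on_camera(self) -> None:
        """Birth: activate the camera so it begins perceiving the scene."""
        self.camera.active = True

    def set_frame(self, frame: int) -> None:
        """Time: move the timeline slider to the given frame."""
        self.current_frame = frame


scene = Scene()
scene.objects["house"] = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (4.0, 3.0, 0.0)]
scene.camera = Camera(x=2.0, y=-10.0, z=1.7)
scene.turn_on_camera()
scene.set_frame(48)
```

Everything the camera can perceive, including its own location and the current frame, lives inside the single `scene` object held in the computer's memory.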

4.2. Geometry Proving Space in Three Dimensions

During life, we are located at the center of our environment. In simple terms, we are an awareness, or point of view, that perceives its surrounding space.

When we apply the definitions derived from 3D modeling, the geometry becomes much more precise. First, we define awareness at the center of the environment as a single point, as shown in Blender's control panel. There is a geometric difference between awareness envisioned as a furry ball and awareness as a precise single point. 3D modeling shows us that the camera exists at just one point within the environment.

The surrounding space, on the other hand, is unlimited in physical size. Since its underlying foundation is based on geometry, it can be any size. A larger physical space does not equate to more bytes required in memory. It is resolution-independent because it is vector-based, not raster-based.
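The point about bytes can be checked with a small sketch. It assumes a simplified storage scheme in which each vertex is three 64-bit floating-point numbers. The helper names `pack_vertices` and `scale` are hypothetical, and real 3D file formats store more than raw coordinates. Within the range a floating-point number can represent, a cube one million times larger occupies exactly as many bytes as the original.

```python
import struct


def pack_vertices(vertices):
    """Serialize (x, y, z) vertices as 64-bit floats, three per vertex."""
    flat = [coord for vertex in vertices for coord in vertex]
    return struct.pack(f"<{len(flat)}d", *flat)


def scale(vertices, factor):
    """Return a copy of the vertices with every coordinate multiplied by factor."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


# The eight corners of a unit cube.
unit_cube = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
huge_cube = scale(unit_cube, 1_000_000.0)

small_bytes = len(pack_vertices(unit_cube))
large_bytes = len(pack_vertices(huge_cube))
print(small_bytes, large_bytes)  # 192 192
```

A raster representation would behave differently: a grid of cells covering a space a million times wider would need far more cells, and therefore more memory, at the same level of detail.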

The geometry is such that awareness and memory are mathematical inverses of each other. One is a single point. The other is unlimited space. This gives rise to our awareness/memory model of existence. It states that these two concepts work together. Awareness is the smallest possible thing. Surrounding space is the largest possible thing. The combination of the two, working as inverses, provides the awareness/memory model of life we all live within.

4.3. The Geometry of Spacetime in Four Dimensions

3D modeling has shown us that all moments exist. When we think of past moments, such as a day last week, we think in terms of calling up a memory of that moment. As such, memory seems weak and vague. 3D modeling shows us that we can access any moment we like simply by moving the pointer on Blender's Timeline Panel.

Moment A and moment B are equally defined. Being in moment A does not make moment B any less defined; it only means we are at moment A's location. In 3D software, all moments are stored in memory in the same way. We can access any of them we want, future or past, simply by moving the timeline pointer.
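The behavior of a timeline pointer can be sketched as follows. This is an assumption-laden illustration rather than Blender's implementation: the `keyframes` table and the `location_at` function are hypothetical, and linear interpolation is used for simplicity even though animation software typically offers several interpolation modes. The point it shows is that every frame can be evaluated on demand, in any order, from data already held in memory.

```python
from bisect import bisect_right

# Hypothetical keyframes: frame number -> camera (x, y, z) location.
keyframes = {
    1: (0.0, 0.0, 0.0),
    50: (10.0, 0.0, 0.0),
    100: (10.0, 20.0, 0.0),
}


def location_at(frame):
    """Return the camera location at any frame by linear interpolation."""
    frames = sorted(keyframes)
    # Before the first or after the last keyframe, hold the end value.
    if frame <= frames[0]:
        return keyframes[frames[0]]
    if frame >= frames[-1]:
        return keyframes[frames[-1]]
    # Find the two keyframes that bracket the requested frame.
    i = bisect_right(frames, frame)
    f0, f1 = frames[i - 1], frames[i]
    t = (frame - f0) / (f1 - f0)
    p0, p1 = keyframes[f0], keyframes[f1]
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


# Move the pointer forward, then back, in any order.
later = location_at(75)
earlier = location_at(1)
print(later, earlier)
```

Asking for frame 75 and then frame 1 requires no replay of the frames in between. Moving the pointer only selects which stored moment is evaluated.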

Real life operates the same way. Moments of the past are stored in memory. We could, in theory, be inside any moment we want simply by moving a pointer on a timeline. The implications of this concept are profound. This means that inside our memory we have all the moments we have ever experienced. Like 3D software, these are not copies of moments. These are the actual moments. It simply means that the timeline pointer is located at another moment in time.

What we have is the accumulation of all moments ever experienced residing in memory. This gives rise to some interesting geometry. On the one hand, we have awareness as a single point within the environment. Awareness is also located at just one moment in time. Thus, we have one point of awareness in an eternal realm of spacetime.

The mathematical inverse of this single point is all spacetime - unlimited in length, width, depth, and eternal in time. Unlimited space is all physical space ever experienced. Unlimited time is all physical time ever experienced. This realm of unlimited time and space exists within us all. This realm exists within our memory. It will be there, intact, at the last moment of life.

4.4. Awareness in the Realm of Unlimited Spacetime

When we reach the last moment of life, we will be in the presence of a vast realm of time and space. The size of this realm, compared with where we are now, will be as all spacetime is to a single point. It will be infinitely larger than where we are now.

When we see this realm, we are not going to simply cross a threshold into it. This will not be the same-sized awareness within a much larger space. We won't be ourselves. It won't be business as usual as we stride into the Kingdom of Heaven.

What will happen is awareness will expand. It will undergo a dimensional transformation from a point in spacetime to all spacetime. The result of this change of dimension is awareness of unlimited physical size. That means conscious awareness will be everywhere simultaneously. The geometry proves it to be so.

During life, we are this single point of awareness. At the end of life, we change dimensions to become its inverse - unlimited awareness throughout space and time. Everything you have ever seen will be there. Everyone you've ever known will be there. You will be one with God - pure knowledge and light everywhere.


Footnotes

[1] Smith, J., "The Rise of VR and AR in 3D Software," Tech Innovations Quarterly, 2022.

[2] Baddeley, A. D., & Hitch, G. J. (1974). Working memory. The Psychology of Learning and Motivation, 8, 47-89.

[3] Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information.

[4] Blender Foundation. "History of Blender." Blender.org. Accessed January 24, 2025.

[5] Roosendaal, Ton. "The Free Blender Campaign." Blender Foundation, 2002.

[6] Blender Institute. "Open Movie Projects." Blender.org. Accessed January 24, 2025.

[7] Purchased on sketchfab.com. Developed by Denniswoo1993.

[8] Hubel, D. H., & Wiesel, T. N. (1968). Receptive fields and functional architecture of monkey striate cortex. Journal of Physiology, 195(1), 215-243.

[9] Andersen, R. A., & Cui, H. (2009). Intention, action planning, and decision making in parietal-frontal circuits. Neuron, 63(5), 568-583.

[10] Culham, J. C., & Kanwisher, N. G. (2001). Neuroimaging of cognitive functions in human parietal cortex. Current Opinion in Neurobiology, 11(2), 157-163.

[11] O'Keefe, J., & Nadel, L. (1978). The Hippocampus as a Cognitive Map. Oxford University Press.

[12] Halligan, P. W., & Marshall, J. C. (1998). Spatial neglect: A clinical handbook for diagnosis and treatment. Journal of Neurology, Neurosurgery & Psychiatry, 65(5), 678.

[13] Kourtzi, Z., & Kanwisher, N. (2001). Representation of perceived object shape by the human lateral occipital complex. Science, 293(5534), 1506-1509.

[14] Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15(1), 20-25.

[15] LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

[16] Frith, Chris. Making Up the Mind: How the Brain Creates Our Mental World. Blackwell Publishing, 2007.

[17] Sacks, Oliver. Hallucinations. Alfred A. Knopf, 2012.

[18] Kahneman, Daniel. Thinking, Fast and Slow. Farrar, Straus and Giroux, 2011.

[19] Piaget, Jean. The Construction of Reality in the Child. Routledge, 1955.

[20] Slater, Mel, and Sylvia Wilbur. "A Framework for Immersive Virtual Environments (FIVE): Speculations on the Role of Presence in Virtual Environments." Presence: Teleoperators and Virtual Environments, 1997.

[21] Schroeder, Ralph. "Social Interaction in Virtual Environments: Key Issues, Common Themes, and a Framework for Research." Presence: Teleoperators and Virtual Environments, 2002.

[22] Bostrom, Nick. "Are You Living in a Computer Simulation?" Philosophical Quarterly, 2003.

[23] Riva, Giuseppe, and Brenda K. Wiederhold. "The Neuroscience of Virtual Reality: From Virtual Exposure to Embodied Medicine." Cyberpsychology, Behavior, and Social Networking, 2020.

[24] Schroeder, Ralph. "Social Interaction in Virtual Environments: Key Issues, Common Themes, and a Framework for Research." Presence: Teleoperators and Virtual Environments, 2002.

Bibliography

• Andersen, R. A., & Cui, H. (2009). Intention, action planning, and decision making in parietal-frontal circuits. Neuron, 63(5), 568-583.

• Baddeley, A. D., & Hitch, G. J. (1974). Working memory. The Psychology of Learning and Motivation, 8, 47-89.

• Blender Foundation. "History of Blender." https://www.blender.org.

• Blender Institute. "Open Movie Projects." https://www.blender.org.

• Bostrom, Nick. "Are You Living in a Computer Simulation?" Philosophical Quarterly, 2003.

• Culham, J. C., & Kanwisher, N. G. (2001). Neuroimaging of cognitive functions in human parietal cortex. Current Opinion in Neurobiology, 11(2), 157-163.

• Foley, J. D., van Dam, A., Feiner, S. K., & Hughes, J. F. (1996). Computer Graphics: Principles and Practice. Addison-Wesley.

• Frith, Chris. Making Up the Mind: How the Brain Creates Our Mental World. Blackwell Publishing, 2007.

• Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15(1), 20-25.

• Halligan, P. W., & Marshall, J. C. (1998). Spatial neglect: A clinical handbook for diagnosis and treatment. Journal of Neurology, Neurosurgery & Psychiatry, 65(5), 678.

• Harvey, M., & Rossit, S. (2012). Visuospatial neglect in action. Neuropsychologia, 50(6), 1018-1028.

• Hubel, D. H., & Wiesel, T. N. (1968). Receptive fields and functional architecture of monkey striate cortex. Journal of Physiology, 195(1), 215-243.

• Kahneman, Daniel. Thinking, Fast and Slow. Farrar, Straus and Giroux, 2011.

• Kant, Immanuel. Critique of Pure Reason. Translated by Norman Kemp Smith, Macmillan, 1929.

• Kourtzi, Z., & Kanwisher, N. (2001). Representation of perceived object shape by the human lateral occipital complex. Science, 293(5534), 1506-1509.

• LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

• Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. W.H. Freeman and Company.

• O'Keefe, J., & Nadel, L. (1978). The Hippocampus as a Cognitive Map. Oxford University Press.

• Piaget, Jean. The Construction of Reality in the Child. Routledge, 1955.

• Roosendaal, Ton. "The Free Blender Campaign." Blender Foundation, 2002.

• Sacks, Oliver. Hallucinations. Alfred A. Knopf, 2012.

• Schroeder, Ralph. "Social Interaction in Virtual Environments: Key Issues, Common Themes, and a Framework for Research." Presence: Teleoperators and Virtual Environments, 2002.

• Smith, J., "The Rise of VR and AR in 3D Software," Tech Innovations Quarterly, 2022.